Pixalate’s inaugural Connected TV Seller Trust Index (CSTI) has sparked discussion about the quality of programmatic OTT/CTV advertising, as well as questions about our specific methodology for ranking sellers. We always welcome analysis of our rankings, especially the discourse that inevitably follows the publication of a new index. However, to enable a free flow of ideas and spur valid criticism and feedback, we feel compelled to correct what we believe is flawed analysis and misguided assumptions, especially about practices that are foundational to our processes and rankings.

There appear to be several core assumptions and implications in SpotX’s blog post last week, entitled “How to Truly Assess CTV Sell-Side Platform Quality and Why Companies like Pixalate Don’t See The Whole Picture,” that are not accurate. (To its credit, SpotX noted that certain points in its post were based on assumptions.)

This blog post will address, in significant detail, our CTV Seller Trust Index. For starters, we will correct some of the high-level misconceptions:

- ‘Direct’ does not automatically mean higher quality: The idea that ‘direct’ equals higher quality is a common misconception. Before the discovery of the Monarch ad fraud scheme, it would have been easier to make this assertion.

- Pixalate is years ahead of other measurement companies in OTT/CTV: Pixalate’s technological capabilities are not hindered by SpotX’s “Audience Lock.”

- Sellers do not need to be Pixalate clients to achieve favorable rankings: The implication that a commercial partnership with Pixalate is required to rank well (or rank at all) is patently false. Pixalate relies upon unadulterated analysis of buy-side data to generate its rankings.

One of the benefits of serving in this industry for so long (remarkably, coming up on a decade now) is the opportunity to get to know the many players on both the buy and sell sides who continue to further the evolution of digital advertising. One such entity is SpotX, which, based on what we have seen over the years, is a respected sell-side platform that seems committed to operating with good faith and integrity. Indeed, it has consistently ranked high on our quarterly Seller Trust Indexes (“STIs”) for years, not because of any relationship with us (it is not a client), but because of the objective, holistic methodology that drives our opinions. In short, we have nothing but respect for SpotX and the clear effort and investment its team puts into maintaining a quality marketplace, which continue to be reflected in its STI rankings.

We were therefore surprised to see the flawed assertions in SpotX’s recent blog post. Since 2014, we have made an unwavering commitment to invest the time, resources, and effort necessary to regularly compile and publish our STIs in a way we believe, with confidence, captures a comprehensive, objective assessment of the industry’s top sellers. While we acknowledge in our STIs that standards and measurements continue to evolve — and we readily concede that we may measure invalid traffic (IVT) differently than other sources — we unreservedly stand behind our opinions in our STIs.

In order to address concerns and misimpressions regarding our process and methodology, we are sharing substantial additional details below regarding how we compile our STIs.

1. Objective Methodology

First and foremost, let us be clear: Sellers are not required to be clients in order to achieve favorable rankings in Seller Trust Indexes. While SpotX does not come out and state otherwise in its blog post, it certainly seems to make this implication. This is flatly untrue. Our methodology is objective, meaning we do not consider the identity of any company when we run our data sets. Period.

Our opinions are based on our objective data sets, which consist predominantly of buy-side open auction programmatic traffic sources. For example, as we shared in a recent Server-Side Ad Insertion (SSAI) webinar, our data sets include over 30,000 programmatic-supported apps, over 300 million OTT/CTV devices monitored, and billions of programmatic advertising impressions. We then apply a unique, blind scoring algorithm to this data, weighting various factors (which we expand upon below) according to an impartial formula derived from industry guidelines, many years of relentless R&D, and discussions with partners, peers, and industry organizations. Our overarching goal is to surface what matters most to ad industry stakeholders. While some may weigh factors differently, or include more or fewer factors, it cannot be disputed that we make our factors known and apply an objective process uninfluenced by the presence or absence of any commercial relationship with Pixalate.

Indeed, over the years, it has often been the case that companies ranked high in our STIs have not been clients, and vice versa. Because our methodology is objective and does not depend on the identity of any particular company, client status simply does not enter into the analysis. To ensure that no data or entity is ever given preferential treatment, our methodology relies on buy-side rather than sell-side data. By not using our clients’ sell-side data, we apply an approach designed to avoid even the appearance of preferential treatment. Unfortunately, we did not make this point clear in our published STIs, an oversight we will correct going forward.

Another unfortunate implication from SpotX’s blog post is that Pixalate is somehow willing to disclose some of its other clients’ data sets as part of potential commercial relationships. This, again, is flatly untrue. Pixalate does not share any of its clients’ data with any other clients, in connection with its Seller Trust Indexes or for any purposes.

Now, it is true that we think our analytics and blocking solutions can provide valuable insights to sellers, which in turn can help them improve IVT-related metrics and outcomes. Using these solutions optimally to improve a seller’s traffic quality necessarily involves sharing with that seller its own analytics data (but never any other Pixalate client’s data). But as we say in our STIs, anyone may be able to increase their score by improving positives, such as more unique inventory and traffic sources, and by lowering negatives through detecting and filtering ad fraud. You do not need to be a Pixalate client to do so. Taking affirmative steps to mitigate risk and improve quality will always have the potential to help you in a quality-rankings system. But, just so we’re clear: we could not be more confident in, and proud of, our solutions’ ability to enhance our clients’ efforts to improve their processes and outcomes.

Speaking of which:

2. What We See In The Connected TV/OTT Space That Others Don’t

In its blog post, SpotX addresses the factors that we consider as part of our CTV STI rankings, suggesting for some factors that Pixalate is somehow missing something, or for others that SpotX should be ranked higher based on its own internal assessment of its values and processes. While we have gotten overwhelmingly positive feedback on our STIs over the years, we have also heard similar comments from others.

It is important to emphasize once again what Pixalate is trying to do with our STIs: we are striving to provide unmatched, objective insights into the factors and considerations that ad industry stakeholders value most. To do that, we must be comprehensive and holistic. We must apply the expertise and wisdom developed over years of R&D, implementation, and industry leadership. We must employ the same capabilities that enabled us to be the first, and currently the only, MRC-accredited company for IVT and Sophisticated Invalid Traffic (SIVT) detection across CTV/OTT/SSAI environments. And in doing so, our methodology often sees things that others, including our competitors, do not, which can lead to confusion and a sense that we are not giving due weight to certain isolated factors.

For example, SpotX understandably poses the question of how an indirect seller can rank higher than a “more direct” seller. The simple fact is that there are numerous ways IVT can wreak havoc in a closed supply chain. Infrastructure fraud is one example, where an SSAI vendor double fires impressions to manipulate the measurement process. Another example of such IVT is continuous play, even from “good” OTT/CTV viewers.

In short, sometimes the picture is not as clear as it appears. That is where we believe our methodology, which is not focused on any one metric or piece of conventional wisdom, provides a much-needed holistic view of what matters to industry stakeholders.

Unfortunately, this holistic view can sometimes lead to misunderstandings about certain aspects of our rankings or invite assumptions that are simply not correct. This is why we provide a glossary in every STI explaining the factors we consider. But we recognize the need for continuing dialogue, so here we provide some additional context – plus a number of clarifications – regarding our CTV Seller Trust Index:

- IVT Score: As SpotX notes, being 100% IVT-free is unrealistic, because sellers with honest intentions can still unwittingly carry IVT. And transparency, or the appearance of it, does not always mean fraud-free, as Pixalate found when it uncovered schemes such as Monarch and DiCaprio. In a complex ecosystem, bad actors are continually refining their methods and exploits, and it takes more than “common sense” to stay on top of them.

Indeed, if one’s vendor is not accredited for OTT/CTV/SSAI detection, there is a real chance that neither the vendor nor its client is seeing the whole picture. Put differently, if one’s vendor isn’t at the forefront of SIVT detection in emerging areas such as OTT/CTV/SSAI, one may, despite the best of intentions, be unwittingly looking through rose-colored glasses.

At this stage, MRC accreditations are table stakes, and fraud-free guarantees based on non-MRC-accredited vendors might raise a lot of questions in the buy-side community. (On the plus side, if one were to tie one’s fraud-free guarantee to IVT detection lacking such sophisticated capabilities, seeing through rose-colored glasses might save on payouts!) This is why rankings are fluid across channels and years. As exploits change, detection capabilities must evolve to keep pace.

All of this is a long way of saying that the presence and extent of IVT is not always apparent (even to the sellers themselves), and certainly not always indicative of bad intent. As currently the only MRC-accredited company for omni-channel IVT and SIVT detection, including in CTV/OTT/SSAI environments, we are confident that this is a compelling example of an area where Pixalate can often see what others cannot, sometimes even in sellers’ “more direct” traffic. Obviously, the best way to test our confidence would be for other measurement vendors to publish rankings similar to our STIs and put their methodologies up for public review and critique, as we continue to do.

- Household Reach Score: For the reasons detailed in the SSAI Transparency Score section below, the assumption that Pixalate is only measuring a “couple of campaigns” associated with certain seller platforms is wildly off base.

After normalizing the data Pixalate receives, we identify deviations and biases in it. Pixalate has developed a novel set of methodologies to calculate propensity scores, adjust for confounding factors, correct biases, and calculate reach, so that sellers can be compared fairly.

Using this methodology, Pixalate can provide out-of-sample projections that we believe are extremely accurate using bias correction algorithms that act both on the spatial and temporal dimensions. As for SpotX’s statements about its own household reach, we have no reason to quibble with that assertion. Indeed, SpotX scored “straight As” across all three platforms in our CTV STI for Household Reach Score.

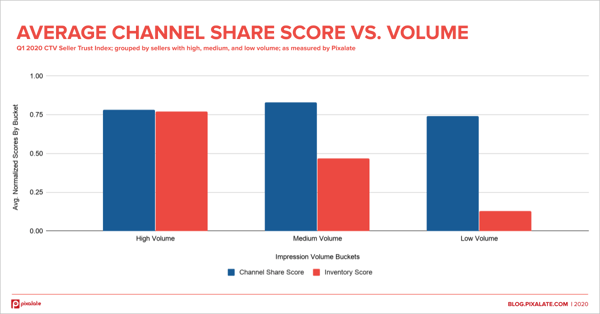

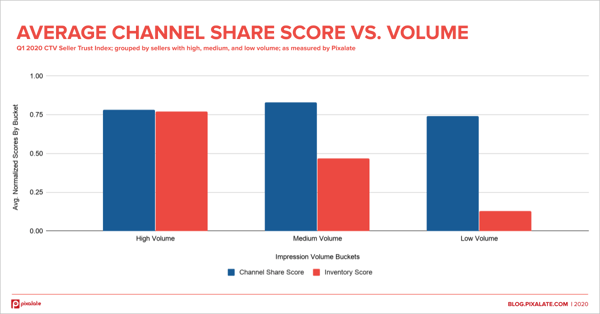
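Pixalate’s reach methodology is proprietary and is not described beyond the mention of propensity scores and bias correction. As a generic illustration only, the sketch below shows a textbook inverse-propensity-weighted (Horvitz-Thompson style) reach estimate: each observed household is counted as 1/p households, where p is the estimated probability that a household in its group shows up in the measured sample. The function name, the “stratum” grouping, and the propensity values are hypothetical assumptions, not Pixalate’s formula.

```python
from collections import defaultdict


def ipw_household_reach(observations, propensity):
    """Hypothetical sketch: estimate per-seller household reach by
    inverse propensity weighting.

    observations: iterable of (seller, household_id, stratum) tuples, where
        stratum is an assumed grouping (e.g. device platform).
    propensity: dict mapping stratum -> estimated probability that a
        household in that stratum appears in the measured sample.
    """
    # Deduplicate: each household counts once per seller, however many
    # impressions it generated.
    households = defaultdict(dict)
    for seller, household_id, stratum in observations:
        households[seller][household_id] = stratum

    # Each observed household stands in for 1/p households in the population.
    return {
        seller: sum(1.0 / propensity[stratum] for stratum in by_id.values())
        for seller, by_id in households.items()
    }


obs = [
    ("SellerA", "hh1", "roku"),
    ("SellerA", "hh1", "roku"),    # repeat impression, same household
    ("SellerA", "hh2", "firetv"),
    ("SellerB", "hh3", "roku"),
]
p = {"roku": 0.5, "firetv": 0.25}  # illustrative propensities only
print(ipw_household_reach(obs, p))  # {'SellerA': 6.0, 'SellerB': 2.0}
```

Up-weighting under-sampled groups is what lets a sample project beyond itself. The bias correction the post describes also acts on the temporal dimension, which this static sketch does not attempt.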

- Channel Share Score: Pixalate calculates the Channel Share Score by taking into account factors including app popularity and traffic volume. In other words, we do not simply assign scores based on the raw number of apps represented by a seller. Instead, we developed a complex metric that captures a notion of overall channel quality.

In the plot below, we show a comparison between the normalized inventory (volume) scores seen across sellers versus their Channel Share Score. As evident from the graph, large inventory volumes do not necessarily imply high Channel Share Scores.

We do not doubt that SpotX and many other top-tier sellers strive to only partner with reputable media owners. We do not see this goal as inconsistent with a high Channel Share Score – SpotX was graded “A” across two platforms for this metric, and “B” for the third.

But again, it bears repeating that this “more direct” approach does not always equate to lower levels of IVT, as Pixalate has uncovered many top brands associated with or otherwise exploited by fraud schemes (see here and here). In other words, like other factors, this one cannot be considered in isolation, but must be viewed holistically.

- SSAI Transparency Score: Here is one area where SpotX unfortunately makes an assumption that is just plainly untrue. SpotX contends that because data is sometimes obfuscated from potential buyers via its Audience Lock environment, Pixalate is unable to accurately measure SSAI transparency since we “do not have a holistic view into the impression served.”

The fact is, such a feature does not affect the data Pixalate captures when our pixel fires for such impressions. Pixalate still captures all the relevant data obfuscated from buyers in programmatic auctions, including X-Device-* information (such as end-user UA strings), X-Forwarded-For IPs, and more, per VAST 4.0+ guidelines, in order to identify the user as granularly as possible. In other words, SpotX’s apparently mistaken belief (which, to its credit, SpotX acknowledged was only a belief) that Pixalate may be able to measure only 9% of SpotX inventory could not be further from the truth.

Pixalate takes its patent-pending SSAI SIVT detection methodologies seriously and has made substantial investments to become currently the only company accredited across channels that rely heavily on SSAI. In that light, it is important to note that passing the correct information (e.g., following industry guidelines and complying with “transparent” SSAI) does not necessarily mean that the information passed was associated with an actual valid impression.

Pixalate measures traffic behind “SSAI” proxies in order to collect as much information as possible about the:

- proxy server,

- user behind the proxy, and

- application using the proxy

This includes our patent-pending IPv4-IPv6 co-detection methodology, as well as the collection of well-known and not-so-well-known signals that can characterize an SSAI transaction. We then rank the proxies involved in all SSAI transactions to determine the validity of the impressions associated with them.
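To make the proxy/end-user distinction concrete, here is a minimal sketch of how a measurement endpoint might separate the two sets of signals on an incoming beacon. VAST 4.x asks SSAI servers to forward the device’s IP and user agent in the X-Device-IP and X-Device-User-Agent headers, and X-Forwarded-For is the de facto proxy header. The function name, the fallback order, and the rule that any forwarded end-user signal marks the request as proxied are assumptions for illustration; this is not Pixalate’s patent-pending method, and real traffic also needs checks for spoofed or CDN-added headers.

```python
def resolve_ssai_request(headers, remote_ip, request_ua):
    """Hypothetical sketch: split end-user signals from SSAI proxy signals."""
    # HTTP header names are case-insensitive; normalize before lookup.
    h = {name.lower(): value for name, value in headers.items()}

    end_user_ip = h.get("x-device-ip")
    if not end_user_ip and "x-forwarded-for" in h:
        # X-Forwarded-For lists the originating client first, then each hop.
        end_user_ip = h["x-forwarded-for"].split(",")[0].strip()
    end_user_ua = h.get("x-device-user-agent")

    # Assumption: any forwarded end-user signal means the request came via a proxy.
    proxied = bool(end_user_ip or end_user_ua)
    return {
        "proxied": proxied,
        "proxy_ip": remote_ip if proxied else None,
        "proxy_ua": request_ua if proxied else None,
        "end_user_ip": end_user_ip if proxied else remote_ip,
        # If proxied but no device UA was forwarded, the end-user UA is unknown (None).
        "end_user_ua": end_user_ua if proxied else request_ua,
    }


ssai = resolve_ssai_request(
    {
        "X-Device-IP": "203.0.113.7",
        "X-Device-User-Agent": "Roku/DVP-9.10",
        "X-Forwarded-For": "198.51.100.2, 10.0.0.1",
    },
    remote_ip="192.0.2.10",
    request_ua="ssai-stitcher/1.0",
)
print(ssai["end_user_ip"], ssai["proxy_ip"])  # 203.0.113.7 192.0.2.10
```

Keeping both the proxy’s and the end user’s signals on each record is what would allow proxies to be scored separately from the users behind them.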

It's worth noting that Pixalate earned MRC accreditation for 12 distinct SSAI metrics, including:

- Gross SSAI Tracked Ads

- SSAI Tracked Ads

- Net SSAI Tracked Ads

- Gross SSAI Tracked Ads %

- SSAI Tracked Ads %

- Net SSAI Tracked Ads %

- Gross SSAI Transparent Tracked Ads

- SSAI Transparent Tracked Ads

- Net SSAI Transparent Tracked Ads

- Gross SSAI Transparent Tracked Ads %

- SSAI Transparent Tracked Ads %

- Net SSAI Transparent Tracked Ads %

It is also important to reiterate that SpotX’s Audience Lock solution does not prevent Pixalate from collecting data about the end user, either directly from the user or via the purported SSAI proxy, depending on the type of beaconing used (i.e., server-side vs. client-side beaconing). Pixalate’s measurement is not impeded by SpotX’s Audience Lock technology; indeed, SpotX earned an “A” grade for its SSAI Transparency Score across the board.

Pixalate, as a measurement and IVT detection and prevention vendor, fully complies with the VAST 4.0+ guidelines that recommend SSAI transparency in order to secure the ecosystem and detect the bad sources, while at the same time identifying the actual user information.

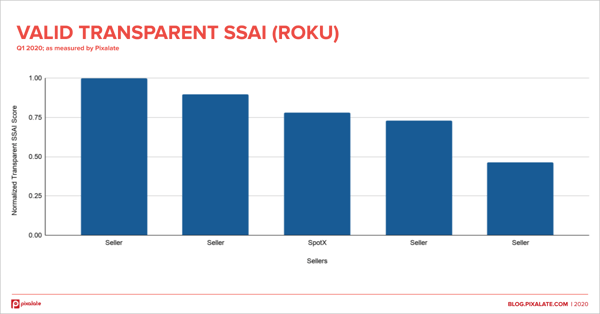

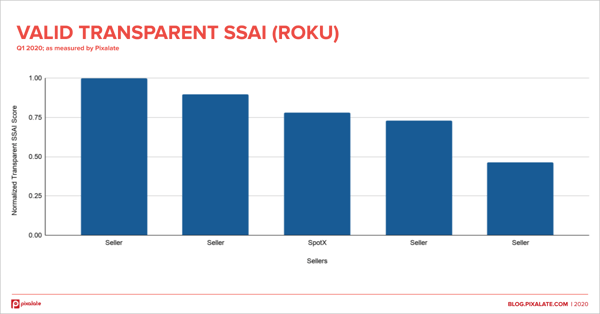

The figure above plots the normalized IVT-free Transparent SSAI scores for the top five sellers in the Roku CSTI, with SpotX ranking in the top three. If Pixalate saw only 9% of SpotX’s CTV inventory, as asserted, this would not be possible.

- Channel Integrity Score: Channel Integrity Score covers aspects of traffic misrepresentation, including possible instances of publisher spoofing (e.g., Monarch fraud scheme), SDK spoofing (e.g., Grindr Mobile-To-CTV spoofing scheme), or fake users (e.g., device farms, inactive devices, etc.).

Pixalate has devoted immense resources and capital to mapping various bundle IDs floating around the programmatic ecosystem to the respective app store-specific IDs as recommended by IAB Tech Lab guidelines. By doing so, we are uniquely positioned to achieve a deep understanding of spoofing methodologies and develop detection models; one example of such detection models is evident in our discovery of the Monarch scheme.

- Side-Loaded App Score: Here again, we do not doubt that SpotX and other similarly-situated competitors strive to only work with direct and well-recognized media owners, such that they are not concerned with side-loaded app store impressions. And, once again, SpotX received an “A” for this factor in our recent STI.

But SpotX’s approach is one of several viable paths. We need to be cognizant that, for many industry stakeholders, a seller’s diligence regarding the risks associated with side-loaded apps is an important factor. A side-loaded app is an application that is not available through a platform’s official app store; side-loading allows a user to install and run Android apps to which they would not otherwise have access.

Our methodology evaluates the seller’s exposure to the risks associated with side-loaded apps. For example, a Fire TV user could side-load an app that purportedly generates advertising impressions, which has the potential to lead to significant brand safety risk, among other risk factors. It is our opinion that this may be an important factor to consider in assessing the relative strength of various sell-side platforms; hence its inclusion as a factor on our Amazon CTV Seller Trust Index. Our Indexes aim to apply to as many entities as possible, including those with differing philosophies.

3. Transparency

Our mission has always been to share openly new developments, trends, and threats within the industry, and how we are responding to them. This is why we are very public with our education and insight efforts. For SSAI, for example, we have published material on risks, methodology, industry standards, industry discussions, industry questions, and thought leadership; for OTT/CTV, on supply chain trends, COVID-19 updates, industry benchmarks, and interviews with outside sources, among other topics.

The same is true for our Seller Trust Indexes. We have always been clear about what factors go into our rankings, why we think they are important to the digital media industry, and why – despite being derived from our proprietary systems and methods – our opinions are, ultimately, our opinions. As we make clear in our STIs:

> Pixalate is sharing this report not to impugn the standing or reputation of any person, entity, or company, but, instead, to report our opinion, based on thorough data analysis, that we believe to be pertinent to programmatic advertising stakeholders.

In addition, we always welcome discussions regarding our methodology. We obviously cannot disclose our data sets because they are proprietary, nor our specific weighting of factors because that could encourage efforts to “game the rankings,” which wouldn’t be useful to anyone. It is our sincere belief that rating systems are important to the overall health of the industry, especially a budding ecosystem like OTT/CTV.

At the same time, we want to avoid misunderstandings whenever possible. It bears reemphasizing that in no way do Pixalate’s rankings depend on any commercial relationship. To suggest otherwise not only hurts the credibility of the rankings unfairly, but also threatens to diminish the efforts of those companies, whether clients or not, that have appeared at the tops of the lists due to a sustained effort in all facets of reputable digital advertising.

We, as always, are open to continued discussions regarding quality in advertising.

Post updated 7/14/20 for clarification