Authors: Amin Bandeali, CTO; Angelos Lazaris, Chief Data Scientist; Melwin Poovakottu, Data Scientist

Pixalate pioneered quality ratings for digital advertising with the Seller Trust Index. We are now bringing that same concept to mobile and Connected TV (CTV) apps with the Global Publisher Trust Indexes.

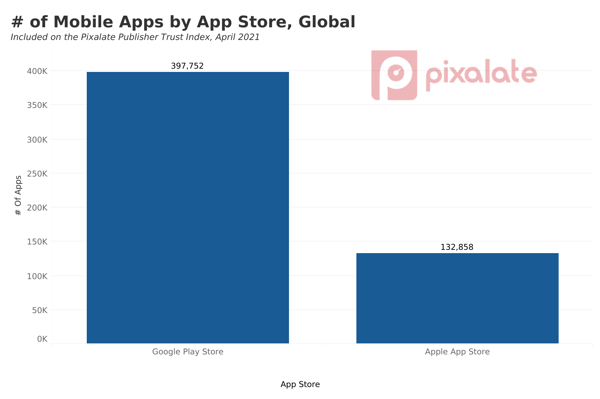

The new CTV & Mobile App quality ratings for the programmatic supply chain are based on Pixalate’s benchmark analysis of over 5 million apps across the Google, Apple, Roku, and Amazon app stores.

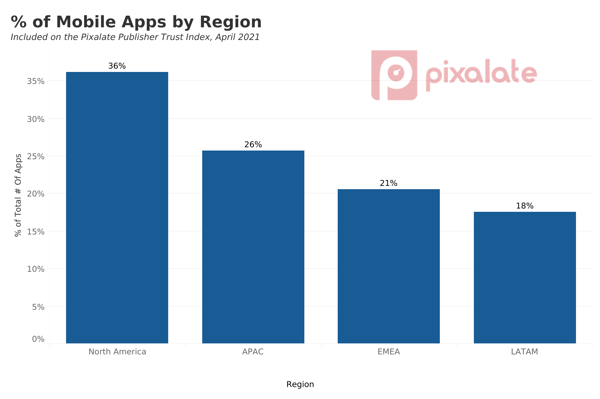

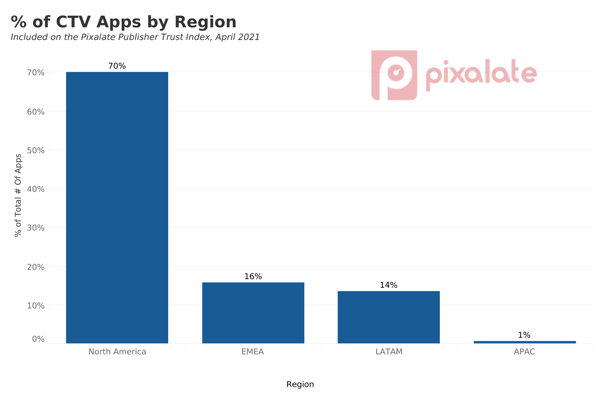

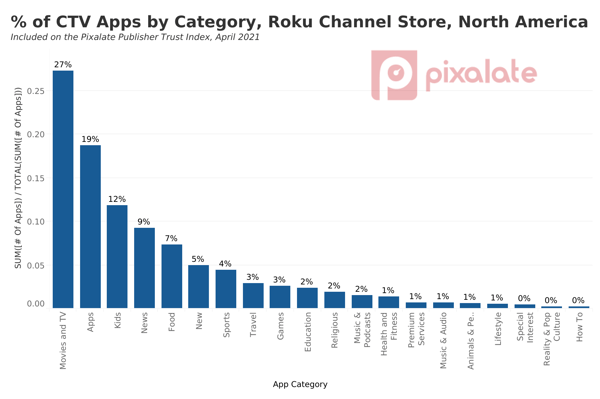

Pixalate's new Publisher Trust Indexes supply quality-based app rankings by region (North America, APAC, EMEA, LATAM, and global) and by app category.

This page details our methodology and FAQs for the Publisher Trust Indexes.

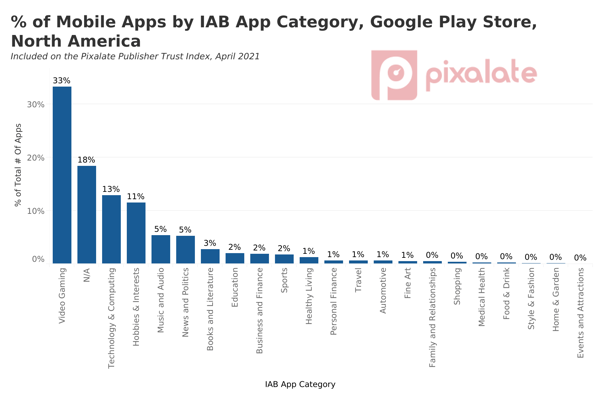

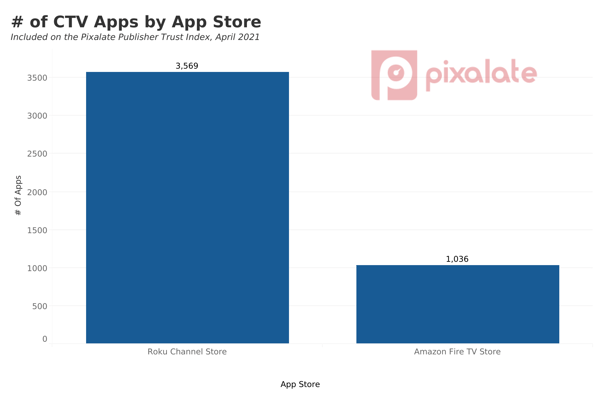

Various statistics & charts from the Publisher Trust Indexes for both CTV and Mobile

Pixalate's data science team invested years of R&D into devising a comprehensive, data-driven ratings and ranking system.

Bandeali oversees the technology, engineering, and data teams at Pixalate. He holds an MSE in Software Engineering & a BSc in Computer Science. He co-founded Pixalate in 2012.

Lazaris leads Pixalate's data science and research teams. He holds a PhD in Electrical Engineering and an MSc and BS in Electronic and Computer Engineering. He joined Pixalate in 2014.

Poovakottu develops data analysis framework models. He holds MS degrees in Information & Data Science and Engineering Management & Computer Management. He joined Pixalate in 2019.

Pixalate's Publisher Trust Index reports rank Mobile and CTV apps across broad geographical regions (including NA, EMEA, APAC, and LATAM), publisher categories (e.g., News and Media), and operating systems (OS).

In the indexes, publishers are ranked on their overall quality in terms of programmatic inventory, invalid traffic (IVT), viewability, and more. Each publisher is assigned an overall score from 0 to 99 that incorporates its individual scores for each metric. The geographical region of each index corresponds to the location where the impression was generated (i.e., the location of the end-user), not the location of the publisher, which is often obfuscated.

To produce the various rankings, Pixalate analyzed more than 2 trillion data points from advertising across more than 150 countries over a one-month period.

The Mobile Publisher Trust Index provides ratings for various combinations of the following data points:

App Store Name: The App Store name characterizes where the publisher apps can be downloaded from. Currently only the “Apple App Store” and “Google Play Store” are supported.

Region Name: The name of the broader geographical region in which the end-user resides (i.e., the location of the end-user generating impressions on a given publisher). The supported regions are North America, EMEA, APAC, LATAM, and Global; the countries that correspond to each region are defined in Appendix 1.

Category: The category of the publisher (e.g., News and Media). An “All” category is added to rank publishers across all categories of a given app store. Categories are determined using the IAB taxonomy.

The main columns used to identify a publisher are “appId” and “title”. The appId is unique within a given app store. For every appId, the following metrics are produced, characterizing its score relative to other publishers:

- Programmatic Reach Score: This score corresponds to a composite measure of programmatic popularity that is calculated in terms of both reach and inventory availability. It is a number from 0 to 99 indicating how extensive an app's user coverage and inventory are compared to its competitors.

- IVT Score: This score corresponds to the publisher's percentage of IVT compared to other publishers in the same category and geography (GEO).

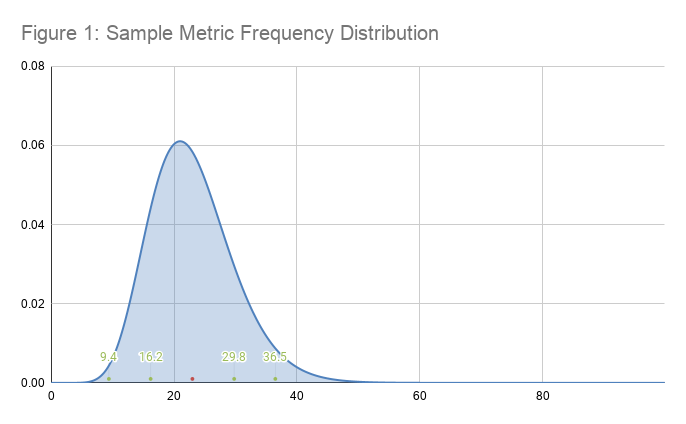

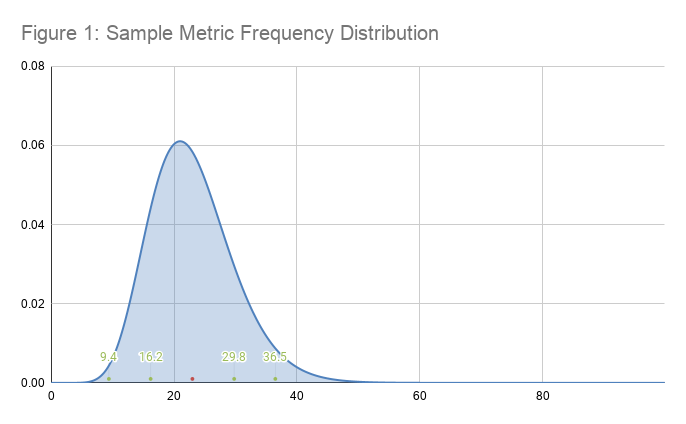

- Ads.txt Score: This score corresponds to the detected presence of ads.txt for a given publisher, as well as the presence of a “balanced” mix of sellers and resellers compared to its competitors. For this, Pixalate has developed a methodology for building a scoring curve for ads.txt that assigns a score to each combination of total sellers and resellers for a given publisher. The main rationale is that too many resellers may increase the risk of IVT, while too few sellers or resellers may limit the availability of the app's inventory on the open programmatic marketplace (see, for example, the grading curve in Figure 1). A balanced combination of the two may provide the best results.

- Brand Safety Score: This score captures brand unsafe keywords or brand unsafe advisories declared by a given app on its app store page. Apps with fewer brand safety risks will achieve a higher score in this category.

- Permissions Score: This score corresponds to the increased likelihood of IVT when an app is granted specific permissions that are uncommon for its category. Permissions that are risky in one category may be necessary for an app to operate in another, and this is taken into account when deriving the score. Note: this score is currently only available for Android.

- Viewability Score: This score corresponds to the performance of the app in terms of its viewability. Apps with higher viewability will achieve a higher score in this category.

- Rank: This corresponds to the rank order of the publisher compared to its competitors within a given app store, category, and region combination.

- Final Score: This score corresponds to the average of all the individual scores mentioned above.

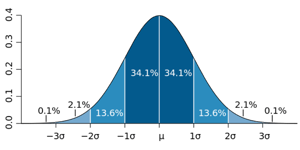

Figure 1. An example of a bell grading curve. If “μ” corresponds to the best value on the x-axis (e.g., number of direct sellers), then As and Bs are assigned to publishers with values around μ, between μ-σ and μ+σ. On the other hand, if the best value on the x-axis lies beyond 2σ (e.g., in the case of IVT), then As are assigned to publishers in the right tail of the distribution.
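The grading curve in Figure 1 and the ads.txt rationale above can be illustrated with a minimal sketch. It assumes a Gaussian-shaped score that peaks at an ideal value μ; the ideal seller and reseller counts, the σ values, the letter-grade bands, and the function names are hypothetical and are not Pixalate's published parameters.

```python
import math

# Hypothetical (mu, sigma) pairs: the "ideal" number of DIRECT seller and
# RESELLER entries, and how quickly the score decays away from that ideal.
IDEAL_SELLERS = (10.0, 4.0)
IDEAL_RESELLERS = (20.0, 10.0)


def bell_score(x: float, mu: float, sigma: float) -> float:
    """Gaussian-shaped score in (0, 1]: exactly 1.0 at mu, smaller further away."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z)


def letter_grade(x: float, mu: float, sigma: float) -> str:
    """Hypothetical two-sided bands, measured in sigmas from the ideal value mu."""
    distance = abs(x - mu) / sigma
    if distance <= 0.5:
        return "A"
    if distance <= 1.0:
        return "B"
    if distance <= 2.0:
        return "C"
    return "D"


def ads_txt_score(has_ads_txt: bool, sellers: int, resellers: int) -> int:
    """0-99 score: no ads.txt scores 0; a balanced seller/reseller mix scores highest."""
    if not has_ads_txt:
        return 0
    combined = bell_score(sellers, *IDEAL_SELLERS) * bell_score(resellers, *IDEAL_RESELLERS)
    return round(99 * combined)


print(ads_txt_score(True, 10, 20))       # balanced mix -> 99
print(ads_txt_score(True, 10, 80))       # far too many resellers -> 0
print(letter_grade(14, *IDEAL_SELLERS))  # one sigma from the ideal -> B
```

Multiplying the two bell scores means a surplus of resellers cannot be offset by an ideal seller count, which mirrors the "balanced mix" idea. For a metric whose best values sit in one tail (as the caption notes for IVT), a one-sided rule based on position in that tail would replace the distance from μ.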

The CTV Publisher Trust Indexes provide ratings for various combinations of the following data points:

Platform Name: The platform of the CTV device (currently Roku and Fire TV are supported).

Region Name: The name of the broader geographical region in which the end-user resides (i.e., the location of the end-user generating impressions on a given publisher).

Primary Genre Name: The category of the publisher (e.g., News and Media). An “All” category is added to rank publishers across all categories of a given platform. Categories are defined using the app categories provided by Roku and Amazon Fire TV, respectively.

The main columns used to identify a publisher are “appId” and “title”. The appId is unique within a given platform. For every appId, the following metrics are produced, characterizing its score relative to other publishers:

Metrics Used on the Publisher Trust Index for Roku Channel Store apps

- Programmatic Reach Score: This score corresponds to a composite measure of programmatic popularity that is calculated in terms of both reach and inventory availability. It is a number from 0 to 99 indicating how extensive an app's user coverage and inventory are compared to its competitors. The score-to-grade assignment is shown in Table 1. Apps with more extensive user coverage compared to competitors will achieve a higher score in this category.

- IVT Score: This score corresponds to the percentage of IVT of the publisher compared to other publishers in a given category and GEO. Apps with less IVT will achieve a higher score in this category.

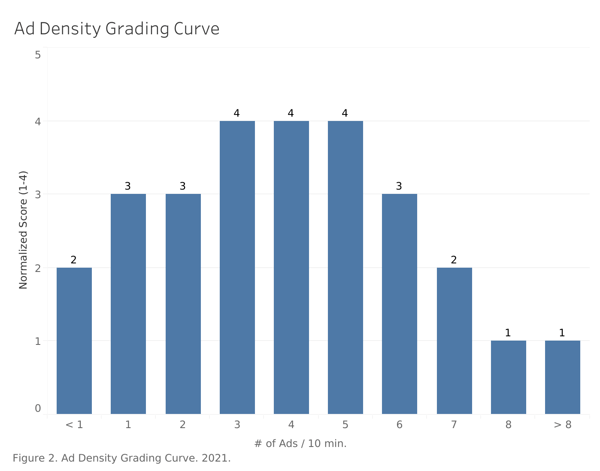

- Ad Density Score: This score characterizes the number of ads per 10 minutes of video (i.e., ad density). It reflects a tradeoff between maximizing advertising revenue and minimizing disruption to the viewer. Pixalate has developed a method for assigning scores to various ad density values. See Figure 2 in The Methodology of our Ratings & Metrics section for more.

- User Engagement Score: This score captures the user engagement with a given app in terms of time spent consuming content. Apps that have a higher average watch time will have a higher score in this category.

- Rank: This corresponds to the rank order of the publisher compared to its competitors within a given app store, category, and region combination.

- Final Score: This score corresponds to the average of all the individual scores mentioned above.

Metrics Used on the Publisher Trust Index for Amazon Fire TV apps

- Programmatic Reach Score: This score corresponds to a composite measure of programmatic popularity that is calculated in terms of both reach and inventory availability. It is a number from 0 to 99 indicating how extensive an app's user coverage and inventory are compared to its competitors. The score-to-grade assignment is shown in Table 1. Apps with more extensive user coverage compared to competitors will achieve a higher score in this category.

- IVT Score: This score corresponds to the percentage of IVT of the publisher compared to other publishers in a given category and GEO. Apps with less IVT will achieve a higher score in this category.

- Ad Density Score: This score characterizes the number of ads per 10 minutes of video (i.e., ad density). It reflects a tradeoff between maximizing advertising revenue and minimizing disruption to the viewer. Pixalate has developed a method for assigning scores to various ad density values. See Figure 2 in The Methodology of our Ratings & Metrics section for more.

- User Engagement Score: This score captures the user engagement with a given app in terms of time spent consuming content. Apps that have a higher average watch time will have a higher score in this category.

- Rank: This corresponds to the rank order of the publisher compared to its competitors within a given app store, category, and region combination.

- Final Score: This score corresponds to the average of all the individual scores mentioned above.
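The Final Score and Rank definitions above can be sketched as follows, taking them literally: the final score is the plain average of an app's metric scores, and ranks are assigned separately inside each (app store or platform, category, region) segment. The `AppScores` structure and the function names are hypothetical, and this sketch does not reproduce the Clustering-Based Generative Non-Linear Model described later on this page.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean

Segment = tuple[str, str, str]  # (app store or platform, category, region)


@dataclass
class AppScores:
    app_id: str
    segment: Segment
    metric_scores: dict[str, float] = field(default_factory=dict)  # each on 0-99


def final_score(app: AppScores) -> float:
    """Plain average of the app's individual metric scores."""
    return mean(app.metric_scores.values())


def rank_within_segments(apps: list[AppScores]) -> dict[tuple[str, Segment], int]:
    """Rank apps by final score (1 = best) separately inside each segment."""
    by_segment: dict[Segment, list[AppScores]] = defaultdict(list)
    for app in apps:
        by_segment[app.segment].append(app)
    ranks: dict[tuple[str, Segment], int] = {}
    for segment, members in by_segment.items():
        # sorted() is stable, so tied apps keep their input order.
        ordered = sorted(members, key=final_score, reverse=True)
        for position, app in enumerate(ordered, start=1):
            ranks[(app.app_id, segment)] = position
    return ranks
```

Keying ranks by (app, segment) matters because the same app can appear in several regions and categories, each with its own rank.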

For each index, in addition to assigning a ratings score for each metric, we also assign a letter grade based on those ratings scores.

This section presents the methodology used to generate scores for metrics that have more involved definitions and can lead to misinterpretation if not carefully defined.

Pixalate collects data from various sources across the whole advertising ecosystem, ranging from ad agencies and DSPs to SSPs, exchanges, and publishers. Specifically, Pixalate collects more than 2 trillion impression-level data points characterizing sellers, publishers, and users across the globe. This gives Pixalate a unique vantage point for using real measurement data to assess the quality of publishers and the level of trust programmatic buyers can place in them.

Pixalate uses the following process to incorporate data for quality rankings:

On top of the above steps, Pixalate processes data in terms of Invalid Traffic (IVT) as follows:

Table 2: Ad Density Scoring Table

| Ads per 10 minutes | Normalized Score (1-4) |
|---|---|
| < 1 | 2 |
| 1 | 3 |
| 2 | 3 |
| 3 | 4 |
| 4 | 4 |
| 5 | 4 |
| 6 | 3 |
| 7 | 2 |
| 8 | 1 |
| >9 | 1 |

Figure 3.
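Table 2 can be applied as a simple lookup. This minimal sketch assumes ad density is measured as ads per 10 minutes of video time; how fractional rates and the unlisted value of exactly 9 are handled is an assumption flagged in the comments, and the function names are hypothetical.

```python
import math

# Table 2: normalized score (1-4) keyed by whole ads per 10 minutes of video.
AD_DENSITY_SCORES = {1: 3, 2: 3, 3: 4, 4: 4, 5: 4, 6: 3, 7: 2, 8: 1}


def ads_per_10_min(ad_count: int, video_seconds: float) -> float:
    """Convert an ad count over some viewing time into ads per 10 minutes."""
    if video_seconds <= 0:
        raise ValueError("video_seconds must be positive")
    return ad_count * 600.0 / video_seconds


def ad_density_score(rate: float) -> int:
    """Look up the Table 2 score for an ads-per-10-minutes rate."""
    if rate < 1:
        return 2
    # Assumption: fractional rates are floored to the row below them, and
    # 9 ads/10 min (not listed in Table 2) is scored like ">9".
    return AD_DENSITY_SCORES.get(math.floor(rate), 1)
```

The peak at 3 to 5 ads per 10 minutes encodes the revenue-versus-disruption tradeoff: very light ad loads score 2, and heavy loads fall to 1.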

The brand safety scoring metric of the Publisher Trust Indexes aims to identify apps with an increased likelihood of adult, drug, violence, alcohol, hate speech, or gambling content. To do so, Pixalate relies on the content advisory ratings provided by the two major app stores, as well as text analysis of the app's description, title, and image captions. Examples of brand unsafe advisories include “Frequent/Intense Sexual Content or Nudity”, “Mature 17+”, and “Adults only 18+”.
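As a rough illustration of combining advisory ratings with text analysis, the sketch below flags the advisory strings quoted above and matches a small keyword list against the title, description, and image captions. The keyword list, the per-flag penalty, and the function names are hypothetical, and exact word matching is a deliberate simplification of real text analysis.

```python
import re
from typing import Iterable

# Advisory strings quoted in the text above.
UNSAFE_ADVISORIES = {
    "Frequent/Intense Sexual Content or Nudity",
    "Mature 17+",
    "Adults only 18+",
}
# Hypothetical keyword list touching a few of the themes named above.
UNSAFE_KEYWORDS = {"casino", "betting", "vodka", "whiskey", "gore", "nsfw"}


def brand_safety_flags(advisories: Iterable[str], title: str, description: str,
                       image_captions: Iterable[str] = ()) -> set[str]:
    """Collect advisory and keyword flags for one app store listing."""
    flags = set()
    for advisory in advisories:
        if advisory in UNSAFE_ADVISORIES:
            flags.add("advisory:" + advisory)
    text = " ".join([title, description, *image_captions]).lower()
    words = set(re.findall(r"[a-z0-9]+", text))
    for keyword in UNSAFE_KEYWORDS & words:
        flags.add("keyword:" + keyword)
    return flags


def brand_safety_score(flags: set[str], penalty: int = 33) -> int:
    """Hypothetical scoring: start at 99 and subtract a fixed penalty per flag."""
    return max(0, 99 - penalty * len(flags))
```

Fewer flags yield a higher score, consistent with the Brand Safety Score description.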

IVT scoring corresponds to the percentage of invalid traffic (IVT) of the publisher compared to its competition in a given category and geography.
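One way to express an IVT rate "compared to its competition" as a 0-99 score is a percentile rank among apps in the same category and geography, where a lower IVT rate earns a higher score. This is an assumed scoring rule for illustration, not Pixalate's published formula, and `ivt_rate` and `ivt_score` are hypothetical names.

```python
from bisect import bisect_left, bisect_right


def ivt_rate(ivt_impressions: int, total_impressions: int) -> float:
    """Fraction of an app's impressions flagged as invalid traffic."""
    if total_impressions <= 0:
        raise ValueError("total_impressions must be positive")
    return ivt_impressions / total_impressions


def ivt_score(app_rate: float, peer_rates: list[float]) -> int:
    """0-99 score: share of peers with a higher IVT rate (ties count half).

    peer_rates holds the IVT rates of every app in the same category and
    geography, including the app being scored.
    """
    if not peer_rates:
        raise ValueError("peer_rates must not be empty")
    ordered = sorted(peer_rates)
    at_or_below = bisect_right(ordered, app_rate)
    below = bisect_left(ordered, app_rate)
    higher = len(ordered) - at_or_below
    ties = at_or_below - below
    return round(99 * (higher + 0.5 * ties) / len(ordered))
```

A percentile rule makes the score relative by construction, so the same IVT rate can score differently in a clean category than in a heavily attacked one.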

An app permission is a mechanism for controlling access to a system- or device-level function through the device’s operating system. Permissions can often have privacy implications (e.g., access to fine-grained location). App permissions help support user privacy by protecting access to the following:

- Restricted data, such as system state and a user's contact information.

- Restricted actions, such as connecting to a paired device and recording audio.
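The Permissions Score hinges on whether a permission is common for an app's category. A minimal sketch of that first step computes, per category, the share of apps requesting each permission and flags requested permissions below a cutoff. The 10% cutoff, the 20-point penalty, and the function names are hypothetical, and the sketch measures rarity only; it does not model the IVT likelihood that the actual score captures. The permission strings in the test are standard Android permission names.

```python
from collections import Counter, defaultdict
from typing import Iterable


def permission_shares(apps: Iterable[tuple[str, set[str]]]) -> dict[str, dict[str, float]]:
    """Share of apps in each category that request each permission."""
    counts: dict[str, Counter] = defaultdict(Counter)
    totals: Counter = Counter()
    for category, permissions in apps:
        totals[category] += 1
        counts[category].update(set(permissions))
    return {
        category: {perm: n / totals[category] for perm, n in counter.items()}
        for category, counter in counts.items()
    }


def uncommon_permissions(category: str, permissions: set[str],
                         shares: dict[str, dict[str, float]],
                         min_share: float = 0.10) -> list[str]:
    """Permissions requested by this app that few apps in its category request."""
    category_shares = shares.get(category, {})
    return sorted(p for p in permissions if category_shares.get(p, 0.0) < min_share)


def permissions_score(uncommon: list[str], penalty: int = 20) -> int:
    """Hypothetical scoring: 99 minus a fixed penalty per uncommon permission."""
    return max(0, 99 - penalty * len(uncommon))
```

Computing shares per category is what lets a permission such as fine location be normal for a weather app while still being flagged elsewhere.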

Monthly Active Users (MAU) is a metric commonly used to characterize a publisher in terms of the users who have engaged with an app over a calendar month or 30 days. MAU can capture the growth of a given publisher. From an advertising perspective, MAU can serve as an upper bound on the number of distinct users expected to generate impressions, assuming that all of the users' ad requests are filled. However, since this is not always the case, Pixalate has taken a different approach and defined programmatic MAU (pMAU) as the monthly active users who have generated programmatic traffic.

To estimate the MAU (and its programmatic counterpart) for a given publisher, Pixalate has developed Machine Learning (ML) models that generate MAU predictions from various data points characterizing the publisher, such as: a) app store information, b) user growth information, c) impression-level data, and d) various other composite metrics generated after removing potential sampling biases, in order to provide robust estimates. At the core of the MAU prediction system lies a modeling ensemble that combines predictions from many individual models into a single prediction for each publisher.
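The difference between MAU and pMAU can be shown by counting distinct users over a 30-day window, optionally keeping only programmatic events. This is a minimal sketch over observed events with a hypothetical `Event` record; as the next paragraph explains, Pixalate estimates MAU with ML models rather than by direct counting.

```python
from datetime import datetime, timedelta
from typing import Iterable, NamedTuple


class Event(NamedTuple):
    app_id: str
    user_id: str
    timestamp: datetime
    is_programmatic: bool  # True if the event came from programmatic ad traffic


def monthly_active_users(events: Iterable[Event], app_id: str, window_end: datetime,
                         days: int = 30, programmatic_only: bool = False) -> int:
    """Distinct users of app_id in [window_end - days, window_end).

    With programmatic_only=True this counts pMAU instead of MAU.
    """
    window_start = window_end - timedelta(days=days)
    users = {
        e.user_id
        for e in events
        if e.app_id == app_id
        and window_start <= e.timestamp < window_end
        and (e.is_programmatic or not programmatic_only)
    }
    return len(users)
```

Because pMAU only admits programmatic events, it can never exceed MAU for the same app and window.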
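The ensemble idea can be sketched as a weighted average of individual model predictions. The two stand-in models, their feature names, and the weights are hypothetical; a real ensemble would use trained models, and other combination rules (such as the median or a stacked meta-model) are equally plausible.

```python
from typing import Callable, Mapping, Sequence

Features = Mapping[str, float]
Model = Callable[[Features], float]


def ensemble_predict(models: Sequence[Model], weights: Sequence[float],
                     features: Features) -> float:
    """Weighted average of each model's MAU prediction for one publisher."""
    if not models or len(models) != len(weights):
        raise ValueError("need one weight per model")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(w * model(features) for model, w in zip(models, weights)) / total_weight


# Hypothetical stand-in models, each using a different group of publisher signals.
def store_model(f: Features) -> float:
    return f["store_installs"] / 10


def traffic_model(f: Features) -> float:
    return f["unique_devices"] * 1.5
```

Averaging models built on different signals tends to dampen the error of any single source, which is the usual motivation for an ensemble.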

User Engagement scoring captures the user engagement with a given app in terms of time spent consuming content.

Viewability scoring aims to score the performance of a given publisher in terms of the percentage of display impressions being viewed. For this score, Pixalate relies on its impression data and assigns scores in proportion to the viewability performance of a given publisher, after all the IVT has been removed (per MRC viewability guidelines).
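The viewability computation described above can be sketched as the share of viewable impressions among measurable display impressions left after IVT removal, scaled to 0-99. The proportional scaling, the `DisplayImpression` record, and the function names are assumptions; the comment on `viewable` refers to the MRC display viewability standard (at least 50% of pixels in view for at least one continuous second).

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class DisplayImpression:
    is_ivt: bool      # flagged as invalid traffic
    measurable: bool  # viewability could be measured for this impression
    viewable: bool    # met the MRC display standard (>=50% pixels, >=1 second)


def viewability_rate(impressions: Iterable[DisplayImpression]) -> Optional[float]:
    """Viewable share of measurable impressions after removing IVT."""
    valid = [imp for imp in impressions if not imp.is_ivt and imp.measurable]
    if not valid:
        return None  # nothing left to measure
    return sum(imp.viewable for imp in valid) / len(valid)


def viewability_score(rate: float) -> int:
    """Hypothetical proportional mapping of a 0-1 rate onto 0-99."""
    return round(99 * rate)
```

Removing IVT before computing the rate keeps bot traffic, which may or may not render ads in view, from distorting the score.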

In order to determine the category of a given app, we rely on information shown in its app store page. In general, the categories listed in the app stores can be used without any further processing. However, there are two issues that need some additional handling:

The first issue is addressed by allowing an app to be ranked in multiple categories. This also serves the purpose of “discoverability”, allowing the app to be listed and ranked under the various similar category filters in use.

The second issue leads to the formation of small categories (i.e., when a category variation is very unpopular) that can leave a given app with few or no competitors. Since this would result in less meaningful rankings for certain small categories, Pixalate has developed a category aggregation framework that merges small categories into a larger parent category to produce more meaningful rankings. The full list of categories merged under each parent is shown in the following table:

| Aggregated Category | App Store Original Category |
|---|---|
| Apps | Accessories & Supplies, Activity Tracking, Alarms & Clocks, All-in-One Tools, Audio & Video Accessories, Battery Savers, Calculators, Calendars, Communication, Currency Converters, Customization, Device Tracking, Diaries, Digital Signage, Document Editing, File Management, File Transfer, Funny Pictures, General Social Networking, Meetings & Conferencing, Menstrual Trackers, Navigation, Offline Maps, Photo & Media Apps, Photo & Video, Photo Apps, Photo-Sharing, Productivity, Reference, Remote Controls, Remote Controls & Accessories, Remote PC Access, Ringtones & Notifications, Screensavers, Security, Simulation, Social, Speed Testing, Streaming Media Players, Themes, Thermometers & Weather Instruments, Transportation, Unit Converters, Utilities, Video-Sharing, Wallpapers & Images, Web Browsers, Web Video, Wi-Fi Analyzers |
| Beauty | Beauty & Cosmetics |
| Books | Book Info & Reviews, Book Readers & Players, Books & Comics |
| Business | Business, Business Locators, Stocks & Investing |
| Education | Education, Educational, Flash Cards, Learning & Education |
| Fashion & Style | Fashion, Style, Style & Fashion |
| Finance | Stocks & Investing |
| Food | Cooking & Recipes, Food, Food & Drink, Food & Home, Wine & Beverages |
| Games | Arcade, Board, Bowling, Brain & Puzzle, Cards, Casino, Game Accessories, Game Rules, Games & Accessories, Jigsaw Puzzles, Puzzles, Racing, Role Playing, Standard Game Dice, Strategy, Trivia, Words |
| Health and Fitness | Exercise, Exercise Motivation, Fitness, Health & Fitness, Health & Wellness, Meditation Guides, Nutrition & Diet, Workout, Workout Guides, Yoga, Yoga Guides |
| How To | Guides & How-Tos |
| Kids | Kids & Family |
| Lifestyle | Crafts & DIY, Furniture, Home & Garden, Hotel Finders, Living Room Furniture, Sounds & Relaxation, TV & Media Furniture, Wedding |
| Movies & TV | Action, Adventure, Cable Alternative, Classic TV, Comedy, Crafts & DIY, Crime & Mystery, Filmes & TV, International, Movie & TV Streaming, Movie Info & Reviews, Movies & TV, On-Demand Movie Streaming, On-Demand Music Streaming, Reality & Pop Culture, Rent or Buy Movies, Sci & Tech, Television & Video, Television Stands & Entertainment Centers, Top Free Movies & TV, TV en Español |
| Music & Audio | Instruments & Music Makers, MP3 & MP4 Players, Music, Music & Rhythm, Music Info & Reviews, Music Players, Portable Audio & Video, Songbooks & Sheet Music |
| New | New, New & Notable, New & Updated, Novelty |
| News | Celebrities, Feed Aggregators, Kindle Newspapers, Local, Local News, Magazines, New & Notable, News & Weather, Newspapers, Podcasts, Radio, Weather, Weather Stations |
| Premium Services | Most Watched, Top Paid, Featured, 4K Editors Picks, Just Added, Most Popular, Top Free, Recommended |
| Religious | Faith-Based, Religião, Religion & Spirituality, Religious |
| Sports | Baseball, Cricket, Extreme Sports, Tennis, Golf, Pool & Billiards, Soccer, Sports & Fitness, Sports Fan News, Sports Games, Sports Information |
| Travel | Travel Guides, Trip Planners |

The category aggregation above can produce large, meaningful categories. However, in small countries with a limited number of apps generating advertising traffic, some categories may still contain only a few apps. For this reason, Pixalate requires a minimum of 30 apps per country and category combination in order to produce publisher rankings.
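The category aggregation and the 30-app threshold can be combined into one sketch: map each store category to its aggregated parent(s), let an app count toward every category it belongs to, and drop country and category segments with fewer than 30 apps. The mapping below is only an excerpt of the table above, and the function names are hypothetical.

```python
from collections import defaultdict
from typing import Iterable

# Excerpt of the aggregation table above. A few store categories (for
# example "Stocks & Investing") are listed under two aggregated categories.
CATEGORY_PARENTS: dict[str, tuple[str, ...]] = {
    "Cooking & Recipes": ("Food",),
    "Food & Drink": ("Food",),
    "Trivia": ("Games",),
    "Workout": ("Health and Fitness",),
    "Stocks & Investing": ("Business", "Finance"),
}
MIN_APPS_PER_SEGMENT = 30


def aggregate_categories(store_category: str) -> tuple[str, ...]:
    """Map a store category to its aggregated parent(s); unknown ones pass through."""
    return CATEGORY_PARENTS.get(store_category, (store_category,))


def rankable_segments(apps: Iterable[tuple[str, str, list[str]]],
                      min_apps: int = MIN_APPS_PER_SEGMENT) -> dict[tuple[str, str], set[str]]:
    """apps holds (app_id, country, store_categories); keep segments big enough to rank."""
    segments: dict[tuple[str, str], set[str]] = defaultdict(set)
    for app_id, country, store_categories in apps:
        for store_category in store_categories:  # an app may be ranked in several categories
            for parent in aggregate_categories(store_category):
                segments[(country, parent)].add(app_id)
    return {seg: ids for seg, ids in segments.items() if len(ids) >= min_apps}
```

Using sets of app IDs means an app listed under two store categories that share a parent is counted once toward that parent's threshold.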

Introducing the Clustering-Based Generative Non-Linear Model

Publisher Trust Index Ratings introduce an additional scoring layer that combines individual scores into a single final score that is used to rank publishers. The main idea here is that we can use a single score to capture many different metrics together and provide a holistic view of a publisher, and then use this to rank publishers and compare their overall performance.

Even though the main idea of quality Ratings is simple, implementing it is extremely challenging due to:

In order to overcome the above challenges, Pixalate has developed a hierarchical approach that provides:

The rationale is that comparisons within such segments are more meaningful, and consumers of such Ratings usually focus on one segment at a time. However, rating publishers within a segment can still be challenging due to the very diverse dynamics of publishers in terms of scale, performance, and overall quality. For this, Pixalate has developed a novel framework for ranking thousands or millions of publishers by introducing a Clustering-Based Generative Non-Linear Model that leverages a clustering algorithm in order to:

The resulting clustering framework can be summarized in the following steps:

Pixalate provides ratings for apps found in the Google Play Store (mobile), Apple App Store (mobile), Roku Channel Store (CTV), and Amazon Fire TV App Store (CTV) provided they belong to one of the following categories:

Pixalate analyzes data gathered from billions of programmatic advertising transactions. Publishers identified by this data are scored in each category, and if enough data exist, they are ranked against other publishers.

To come.

No, Pixalate does not charge publishers to participate in our Publisher Trust Index or see certain data points. Request a free Publisher Diagnostic Report here.

Pixalate provides publishers rated on our Publisher Trust Index with a free Publisher Diagnostic Report to help them identify potential ways to improve quality. Request a free Publisher Diagnostic Report here.

No.

Pixalate collects advertising data from multiple sources across the entire advertising stack, including everyone from ad agencies and DSPs, to SSPs, exchanges, and publishers.

Many of our clients deploy our data analytics solution to gain valuable insights about the advertising opportunities and impressions trafficked by them or to them. While individual client data is restricted for use to that customer only, the data in aggregate helps to create our ratings.

Unfortunately, not all definitions and measurements of ad fraud are created equal. Company reputations may also be prone to hearsay and commentary. Pixalate works with other companies in the industry and participates with the IAB and MRC to employ a rigorous common definition of invalid traffic. As standards and measurement are still evolving, though, Pixalate may simply measure invalid traffic differently than another source. We’ve learned a lot from our data over time and have made corrections where we’ve been wrong. As a result, we believe our measurements are as solid as you will find in the business.

The Pixalate Top 100™ rankings can be sliced by global region and by app category.

The content of this document, and the Publisher Trust Indexes (collectively, the "Indexes"), reflect Pixalate's opinions with respect to factors that Pixalate believes may be useful to the digital media industry. The Indexes examine programmatic advertising activity on mobile apps and Connected TV (CTV) apps (collectively, the “apps”). As cited in the Indexes, the ratings and rankings in the Indexes are based on a number of metrics (e.g., “Brand Safety”) and Pixalate’s opinions regarding the relative performance of each app publisher with respect to the metrics. The data is derived from buy-side, predominantly open auction, programmatic advertising transactions, as measured by Pixalate. The Indexes examine global advertising activity across North America, EMEA, APAC, and LATAM, respectively, as well as programmatic advertising activity within discrete app categories. Any insights shared are grounded in Pixalate's proprietary technology and analytics, which Pixalate is continuously evaluating and updating. Any references to outside sources in the Indexes and herein should not be construed as endorsements. Pixalate's opinions are just that, opinions, which means that they are neither facts nor guarantees; and neither this press release nor the Indexes are intended to impugn the standing or reputation of any person, entity or app.

As used in the Indexes and herein, and: (i) per the MRC, the term “'Fraud' is not intended to represent fraud as defined in various laws, statutes and ordinances or as conventionally used in U.S. Court or other legal proceedings, but rather a custom definition strictly for advertising measurement purposes;” and (ii) per the MRC, “'Invalid Traffic' is defined generally as traffic that does not meet certain ad serving quality or completeness criteria, or otherwise does not represent legitimate ad traffic that should be included in measurement counts. Among the reasons why ad traffic may be deemed invalid is it is a result of non-human traffic (spiders, bots, etc.), or activity designed to produce fraudulent traffic.”
